You’ll have to get to the end of this newsletter to know the full truth 🙂

**Artificial Intelligence is increasingly being used in a variety of fields, from medicine to manufacturing. And journalism is no exception. AI is already being used by some news organizations to help with the writing and editing of articles. For example, the Associated Press uses an AI system called Wordsmith to generate stories about corporate earnings. And Quartz uses a similar system to write brief summaries of business news stories. While these systems are currently limited to fairly simple tasks, there is no reason why they couldn't be expanded to handle more complex writing assignments in the future.**

**Artificial intelligence is increasingly being used by writers to create and develop stories. By analyzing large data sets, AI can identify patterns and trends that can be used to generate new ideas and plotlines. In addition, AI can be used to identify flaws in a story, such as plot holes or inconsistencies, and suggest ways to fix them. AI can also be used to generate realistic characters and dialogue, making it easier for writers to create believable storylines. As AI technology continues to evolve, it is likely that even more writers will begin to use it to develop their stories.**

**That said, it's important to remember that Artificial Intelligence is not yet capable of independent thought or creative storytelling. For now, AI can only assist journalists in their work - it cannot replace them entirely. In the future, AI may well become an invaluable tool for reporters and editors. But as long as there are people who are passionate about telling stories, there will always be a need for human journalists.**

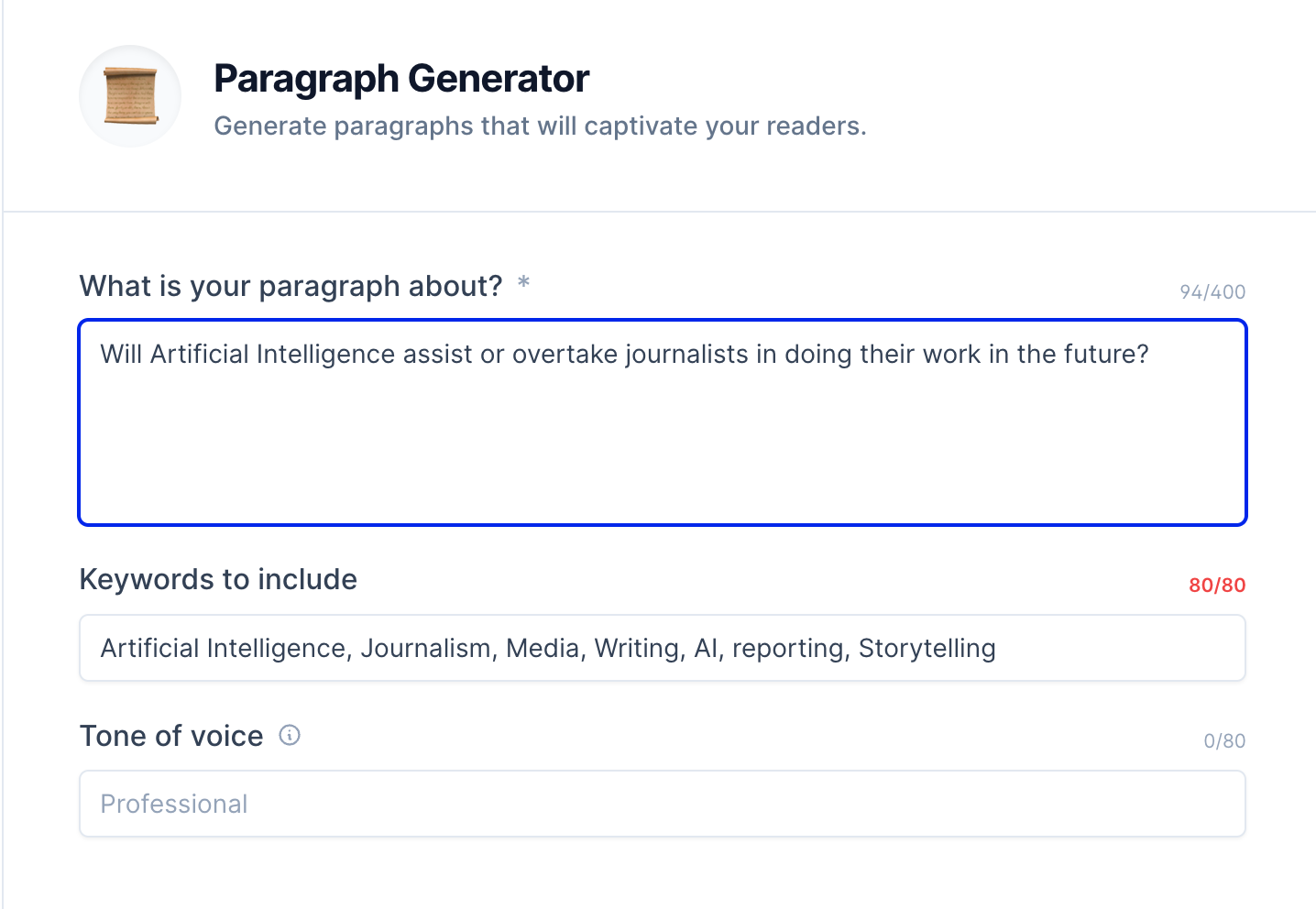

We didn’t write 👆 the text in bold; AI did. We tested it out with a free trial of Jasper, a tool that uses AI to write a paragraph in a matter of seconds. All we had to do was give it a writing prompt and tell it which keywords we wanted it to use. In this case, we gave it two prompts: “Will Artificial Intelligence assist or overtake journalists in doing their work in the future?” and “How do writers use Artificial Intelligence?”

The idea to try this out came after we read this piece in The Verge about how fiction authors are using AI to write their books. It’s a nuanced piece that looks at how AI can help fiction writers overcome challenges like writer’s block or looming deadlines with thousands of words left to write. Journalists can relate to both.

One of the key takeaways of the piece is that AI-written content doesn’t erase the need for human intervention. The fiction writers using these tools had to decide which prompts to give the AI, and getting it to write something the way they wanted could take some experimentation. The writers also couldn’t just publish whatever the AI came up with; they still had to edit the text to fit their narratives.

Anyone using AI also has to be cautious and fact-check the AI’s writing, because it can easily introduce misinformation. For example, in the text we generated in Jasper, we know that Quartz has an AI studio, but we couldn’t verify that the publication is currently using it to “write brief summaries of business news stories,” as the AI wrote. The AI also wrote (in a sentence that we omitted because the misinformation was too glaringly obvious) that “Artificial intelligence has been used in writing for centuries, with early examples dating back to the 16th century.”

Artificial Intelligence only really kicked off in the mid-20th century, though the idea had appeared in science fiction before. And most people would probably have figured out that AI hadn’t been around for centuries. But not all misinformation written by AI is so easy to uncover.

Despite the risks of AI introducing errors into writing, Josh Dzieza, the author of The Verge piece, suggests that knowing the flaws of AI may help people be more cautious about what they read on the internet, especially as AI is used more and more.

“It might not be such a bad thing to have to apply a Turing test to everything I read, particularly in the more commercialized marketing-driven corners of the internet where AI text is most often deployed,” he wrote. “The questions it made me ask were the sorts of questions I should be asking anyway: is this supported by facts, internally consistent, and original, or is it coasting on pleasant-sounding language and rehashing conventional wisdom?”

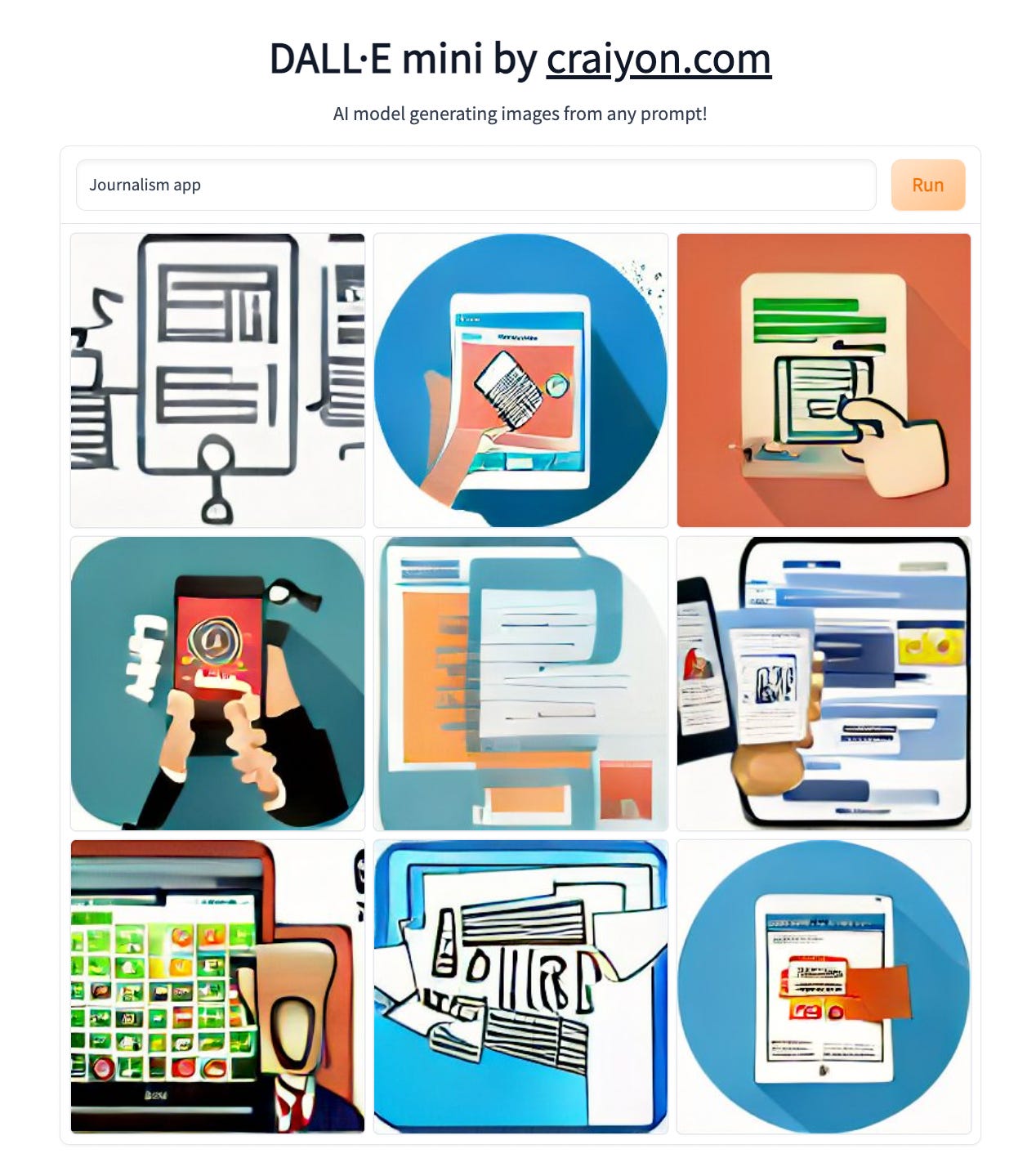

There’s also an argument that AI offers new ways to be creative, as with the hugely popular DALL·E 2, which generates art from prompts written by humans. But who gets the credit for the image? The AI? Or the person who came up with the combination of words to prompt the AI? This video by Vox does a great job of delving into this question:

The art at the top of this piece was made using DALL·E Mini, which functions in a similar way to DALL·E 2. Here’s what the AI did when we prompted it with “journalism app.”

On a philosophical level, it is interesting to see what AI does with the prompts we give it. All of these AI models are built by humans and trained on images that humans created or captured and posted online. So when an AI generates a result, the output is in many ways a reflection of us, even if that reflection is often very flawed.

And while AI probably won’t replace journalism (we still need editing, fact-checking, and original reporting), it might make us think about new ways to do our jobs.